Posted by BritneyMuller

It's a brand-new decade, rich with all the promise of a fresh start and new beginnings. But does that mean you should be doing anything different with regard to your SEO?

In this Whiteboard Friday, our Senior SEO Scientist Britney Muller offers a seventeen-point checklist of things you ought to keep in mind for executing on modern, effective SEO. You'll encounter both old favorites (optimizing title tags, anyone?) and cutting-edge ideas to power your search strategy from this year on into the future.


Video Transcription

Hey, Moz fans. Welcome to another edition of Whiteboard Friday. Today we are talking about SEO in 2020. What does that look like? How have things changed?

Do we need to be optimizing for favicons and BERT? We definitely don't. But here are some of the things that I feel we should be keeping an eye on.

☑ Cover your bases with foundational SEO

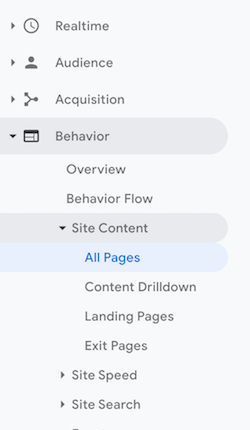

Titles, metas, headers, alt text, site speed, robots.txt, site maps, UX, CRO, Analytics, etc.

Covering your bases with foundational SEO will continue to be incredibly important in 2020: basic things like title tags, meta descriptions, alt text, all of the SEO 101 things.

There have been some conversations in the industry lately about alt text and things of that nature. When Google is getting so good at figuring out and knowing what's in an image, why would we necessarily need to continue providing alt text?

But you have to remember we need to continue to make the web an accessible place, and so for accessibility purposes we should absolutely continue to do those things. But I do highly suggest you check out Google's Cloud Vision API and play around with that to see how good they've actually gotten. It's pretty cool.
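As a rough illustration of checking a few of these basics, here is a minimal sketch using only Python's standard library. It looks for a title, a meta description, and images without alt text in a single HTML string. The names `BasicsAudit` and `audit` are made up for this example, and it is a starting point for a manual review rather than a full audit.

```python
from html.parser import HTMLParser


class BasicsAudit(HTMLParser):
    """Collects the title, the meta description, and images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.images_missing_alt = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            # An empty alt can be legitimate for purely decorative images,
            # so treat this list as items to review, not as errors.
            self.images_missing_alt.append(attrs.get("src") or "(no src)")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a small report for one page's HTML."""
    parser = BasicsAudit()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "meta_description": parser.meta_description,
        "images_missing_alt": parser.images_missing_alt,
    }
```

Calling `audit(page_html)` on each page of a crawl gives a quick list of pages to look at by hand.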

☑ Schema markup

FAQ, Breadcrumbs, News, Business Info, etc.

Schema markup will continue to be really important, FAQ schema, breadcrumbs, business info. The News schema that now is occurring in voice results is really interesting. I think we will see this space continue to grow, and you can definitely leverage those different markup types for your website.
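To make the FAQ case concrete, here is a small sketch that builds schema.org `FAQPage` markup as JSON-LD from question and answer pairs. The helper names `faq_jsonld` and `as_script_tag` are invented for this example, and the sample question in the usage note is a placeholder. Check the output against Google's structured data documentation before relying on it.

```python
import json


def faq_jsonld(questions_and_answers):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in questions_and_answers
        ],
    }


def as_script_tag(data):
    """Wrap the JSON-LD in the script tag that goes into the page's HTML."""
    # Escape "</" so an answer containing "</script>" cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```

For example, `print(as_script_tag(faq_jsonld([("What is SEO?", "Search engine optimization.")])))` prints a block you could paste into a page template.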

☑ Research what matters for your industry!

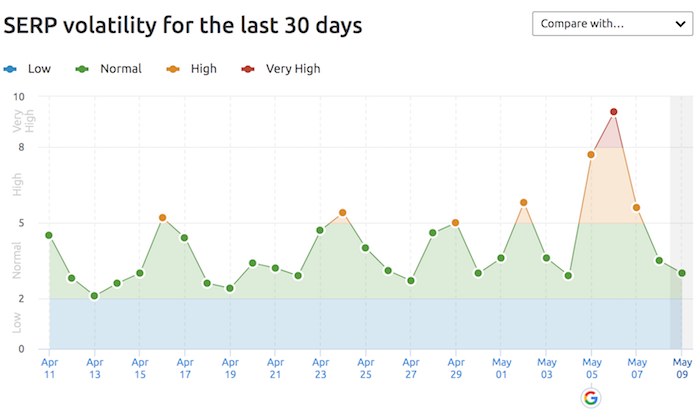

Just to keep in mind, there's going to be a lot of articles and research and information coming at you about where things are going, what you should do to prepare, and I want you to take a strategic stance on your industry and what's important in your space.

While I might suggest page speed is going to be really important in 2020, is it for your industry? We should still worry about these things and still continue to improve them. But if you're able to take a clearer look at ranking factors and what appears to be a factor for your specific space, you can better prioritize your fixes and leverage industry information to help you focus.

☑ National SERPs will no longer be reliable

You need to be acquiring localized SERPs and rankings.

This has been the case for a while. We need to localize search results and rankings to get an accurate and clear picture of what's going on in search results. I was going to put E-A-T here and then kind of cross it off.

A lot of people feel E-A-T is a huge factor moving forward. Just for the case of this post, it's always been a factor. It's been that way for the last ten-plus years, and we need to continue doing that stuff despite these various updates. I think it's always been important, and it will continue to be so.

☑ Write good and useful content for people

While you can't optimize for BERT, you can write better for NLP.

This helps optimize your text for natural language processing. It helps make it more accessible and friendly for BERT. While you can't necessarily optimize for something like BERT, you can just write really great content that people are looking for.

☑ Understand and fulfill searcher intent, and keep in mind that there's oftentimes multi-intent

One thing to think about in this space is we've kind of gone from very, very specific keywords to this richer understanding of, okay, what is the intent behind these keywords? How can we organize that and provide even better value and content to our visitors?

One way to go about that is to consider that Google houses the world's data. They know what people are searching for when they look for a particular thing in search. So put your detective glasses on and examine what it is that they are showing for a particular keyword.

Is there a common theme throughout the pages? Tailor your content and your intent to solve for that. You could write the best article in the world on DIY Halloween costumes, but if you're not providing those visual elements that you so clearly see in a Google search result page, you're never going to rank on page 1.

☑ Entity and topical integration baked into your IA

Have a rich understanding of your audience and what they're seeking.

This plays well into entities and topical understanding. Again, we've gone from keywords and now we want to have this richer, better awareness of keyword buckets.

What are those topical things that people are looking for in your particular space? What are the entities, the people, places, or things that people are investigating in your space, and how can you better organize your website to provide some of those answers and those structures around those different pieces? That's incredibly important, and I look forward to seeing where this goes in 2020.

☑ Optimize for featured snippets

Featured snippets are not going anywhere. They are here to stay. The best way to do this is to find the keywords that you currently rank on page 1 for that also have a featured snippet box. These are your opportunities. If you're on page 1, you're way more apt to potentially steal or rank for a featured snippet.

One of the best ways to do that is to provide really succinct, beautiful, easy-to-understand summaries, takeaways, etc., kind of mimic what other people are doing, but obviously don't copy or steal any of that. Really fun space to explore and get better at in 2020.

☑ Invest in visuals

We see Google putting more authority behind visuals, whether it be in search or you name it. It is incredibly valuable for your SEO, whether it be unique images or video content that is organized in a structured way, where Google can provide those sections in that video search result. You can do all sorts of really neat things with visuals.

☑ Cultivate engagement

This is good anyway, and we should have been doing this before. Gary Illyes was quoted as saying, "Comments are better for on-site engagement than social signals." I will let you interpret that how you will.

But I think it goes to show that engagement and creating this community is still going to be incredibly important moving forward into the future.

☑ Repurpose your content

Blog post → slides → audio → video

This is so important, and it will help you excel even more in 2020 if you find your top-performing web pages and you repurpose them into maybe a SlideShare, maybe a YouTube video, maybe various pins on Pinterest, or answers on Quora.

You can start to refurbish your content and expand your reach online, which is really exciting. In addition to that, it's also interesting to play around with the idea of providing people options to consume your content. Even with this Whiteboard Friday, we could have an audio version that people could just listen to if they were on their commute. We have the transcription. Provide options for people to consume your content.

☑ Prune or improve thin or low-quality pages

This has been incredibly powerful for me and many other SEOs I know in improving the perceived quality of a site. So consider testing and meta no-indexing low-quality, thin pages on a website. Especially on larger websites, we see a pretty big impact there.
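One possible way to shortlist candidates is sketched below, with loud assumptions: visible word count is only a crude proxy for "thin", the 300-word threshold is arbitrary, and the names `word_count` and `noindex_candidates` are invented for this example. Pages that survive a manual review would then get the standard robots meta tag shown in `NOINDEX_TAG`.

```python
import re
from html.parser import HTMLParser

# The tag to place in the <head> of pages you decide to no-index.
NOINDEX_TAG = '<meta name="robots" content="noindex">'


class _TextExtractor(HTMLParser):
    """Gathers text content, ignoring anything inside script or style tags."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def word_count(html):
    """Count words in the page's text, excluding script and style content."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(re.findall(r"\w+", " ".join(parser.chunks)))


def noindex_candidates(pages, min_words=300):
    """pages maps URL -> HTML. Returns URLs below the (assumed) word threshold."""
    return sorted(url for url, html in pages.items() if word_count(html) < min_words)
```

Treat the result as a review list. Some short pages (contact pages, for instance) are perfectly good, and some long pages are still low quality, so test on a subset before rolling anything out.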

☑ Get customer insights!

This will continue to be valuable in understanding your target market. It will be valuable for influencer marketing and for all sorts of other reasons. One incredible tool for this, from our Whiteboard Friday extraordinaire, Rand Fishkin, is SparkToro. So you guys have to check that out when it gets released soon. Super exciting.

☑ Find keyword opportunities in Google Search Console

It's shocking how few people do this and how accessible it is. If you go into your Google Search Console and you export as much data as you can around your queries, your click-through rate, your position, and impressions, you can do some incredible, simple visualizations to find opportunities.

For example, if this is the rank of your keywords and this is the click-through rate, where do you have high click-through rate but low ranking position? What are those opportunity keywords? Incredibly valuable. You can do this in all sorts of tools. One I recommend, and I will create a little tutorial for, is a free tool called Facets, made by Google for machine learning. It makes it really easy to just pick those apart.

☑ Target link-intent keywords

A couple quick link building tactics for 2020 that will continue to hopefully work very, very well. What I mean by link-intent keywords is your keyword statistics, your keyword facts.

These are searches people naturally want to reference. They want to link to it. They want to cite it in a presentation. If you can build really great content around those link-intent keywords, you can do incredibly well and naturally build links to a website.

☑ Podcasts

Whether you're a guest or a host on a podcast, it's incredibly easy to get links. It's kind of a fun link building hack.

☑ Provide unique research with visuals

Andy Crestodina does this so incredibly well. So explore creating your own unique research, keeping it valuable for users rather than too commercial.

I know this was a lot. There's a lot going on in 2020, but I hope some of this is valuable to you. I truly can't wait to hear your thoughts on these recommendations, things you think I missed, things that you would remove or change. Please let us know down below in the comments, and I will see you all soon. Thanks.

Video transcription by Speechpad.com
