Posted by sam.nemzer

SEO split testing is a relatively new concept, but it’s becoming an essential tool for any SEO who wants to call themselves data-driven. People have been familiar with A/B testing in the context of Conversion Rate Optimisation (CRO) for a long time, and applying those concepts to SEO is a logical next step if you want to be confident that what you’re spending your time on is actually going to lead to more traffic.

At Distilled, we’ve been in the fortunate position of working with our own SEO A/B testing tool, which we’ve been using to test SEO recommendations for the last three years. Throughout this time, we’ve been able to hone our technique in terms of how best to set up and measure SEO split tests.

In this post, I’ll outline five mistakes that we’ve fallen victim to over the course of three years of running SEO split tests, and that we commonly see others making.

What is SEO split testing?

Before diving into how it’s done wrong (and right), it’s worth stopping for a minute to explain what SEO split testing actually is.

CRO testing is the obvious point of comparison. In a CRO test, you’re generally comparing a control and a variant version of a page (or group of pages) to see which performs better in terms of conversion. You do this by assigning your users to different buckets and showing each bucket a different version of the website.

In SEO split testing, we’re trying to ascertain which version of a page will perform better in terms of organic search traffic. If we were to take a CRO-like approach of bucketing users, we would not be able to test the effect, as there’s only one version of Googlebot, which would only ever see one version of the page.

To get around this, SEO split tests bucket pages instead. We take a section of a website in which all of the pages follow a similar template (for example the product pages on an eCommerce website), and make a change to half the pages in that section (for all users). That way we can measure the traffic impact of the change across the variant pages, compared to a forecast based on the performance of the control pages.
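As a minimal sketch of what "a forecast based on the performance of the control pages" can mean, the code below assumes daily organic sessions have already been summed per bucket, and assumes the simplest possible model: the variant/control traffic ratio seen before the change would have held during the test. That model and the name `forecast_variant` are illustrative assumptions, not the method used by Distilled’s tool.

```python
def forecast_variant(pre_control, pre_variant, test_control):
    """Forecast daily sessions for the variant pages had nothing changed.

    Simplifying assumption: the variant/control traffic ratio observed in the
    pre-test period would have held throughout the test period.
    """
    ratio = sum(pre_variant) / sum(pre_control)
    return [sessions * ratio for sessions in test_control]


# Hypothetical daily organic sessions, for illustration only.
pre_control = [520, 480, 510, 500]
pre_variant = [500, 470, 505, 525]
test_control = [600, 580, 620, 600]
test_variant = [640, 630, 660, 650]

forecast = forecast_variant(pre_control, pre_variant, test_control)
for day, (actual, expected) in enumerate(zip(test_variant, forecast), start=1):
    # Positive differences mean the variant pages beat the control-based forecast.
    print(f"day {day}: actual {actual}, forecast {expected:.0f}, "
          f"difference {actual / expected - 1:+.1%}")
```

Because the forecast moves with the control pages, anything that lifts or depresses the whole section (seasonality, an algorithm update) shows up in the forecast too, leaving the gap between actual and forecast as the estimate of the change’s effect.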

For more details, you can read my colleague Craig Bradford’s post here.

Common SEO Split Testing Mistakes

1. Not leaving split tests running for long enough

As SEOs, we know that it can take a while for the changes we make to take effect in the rankings. When we run an SEO split test, this is borne out in the data. As you can see in the graph below, it takes a week or two for the variant pages (in black) to start outstripping the forecast based on the control pages (in blue).

After a week or so, it’s tempting to panic that the test isn’t making a difference and call it off as a neutral result. However, we’ve seen over and over again that things often change after a week or two, so don’t call it too soon!

The other factor to bear in mind here is that the longer you leave it after this initial flat period, the more likely it is that your results will be significant, so you’ll have more certainty in the result you find.

A note for anyone reading with a CRO background: I imagine you’re shouting at your screen that it’s not OK to leave a test running longer to try to reach significance, and that you must pre-determine your end date for the results to be valid. You’d be correct for a CRO test measured using standard frequentist statistical methods. In the case of SEO split tests, we measure significance using Bayesian statistical methods, meaning that it’s valid to keep a test running until it reaches significance and you can be confident in your results at that point.
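To give a feel for the Bayesian framing, here is a deliberately simplified sketch, not a description of the model Distilled uses. It treats each day’s log ratio of actual to forecast variant sessions as a noisy measurement of the true effect and assumes independent, roughly normal days and a flat prior, so the posterior for the effect is approximately normal around the mean daily log ratio. The helper name `prob_positive_uplift` is hypothetical.

```python
import math
from statistics import mean, stdev


def prob_positive_uplift(actual, forecast):
    """Approximate posterior probability that the true uplift is above zero.

    Assumptions (for illustration only): daily log ratios are independent and
    roughly normal, and a flat prior is used, so the posterior for the mean
    log ratio is approximately Normal(sample mean, sample sd / sqrt(n)).
    """
    log_ratios = [math.log(a / f) for a, f in zip(actual, forecast)]
    n = len(log_ratios)
    centre = mean(log_ratios)
    se = stdev(log_ratios) / math.sqrt(n)
    # Standard normal CDF evaluated at centre / se, via the error function.
    return 0.5 * (1.0 + math.erf(centre / (se * math.sqrt(2.0))))


# Hypothetical week of variant sessions against a control-based forecast.
actual = [1050, 1080, 1020, 1100, 1070, 1090, 1060]
forecast = [1000, 1010, 1000, 1020, 1000, 1030, 1010]
p = prob_positive_uplift(actual, forecast)
print(f"P(uplift > 0) is roughly {p:.3f}")
```

The output is a direct probability statement about the effect, which is what makes re-checking it as data accumulates feel natural. Real traffic is autocorrelated and trending, so a production analysis would need a richer time-series model than this sketch.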

2. Testing groups of pages that don’t have enough traffic (or are dominated by a small number of pages)

The sites we’ve been able to run split tests on using Distilled ODN have ranged in traffic levels enormously, as have the site sections on which we’ve attempted to run split tests. Over the course of our experience with SEO split testing, we’ve generated a rule of thumb: if a site section of similar pages doesn’t receive at least 1,000 organic sessions per day in total, it’s going to be very hard to measure any uplift from your split test. If you have less traffic than that to the pages you’re testing, any signal of a positive or negative test result would be drowned out by the uncertainty involved.

Beyond 1,000 sessions per day, in general, the more traffic you have, the smaller the uplift you can detect. So far, the smallest effect size we’ve managed to measure with statistical confidence is a few percent.

On top of having a good amount of traffic in your site section, you need to make sure that your traffic is well distributed across a large number of pages. If more than 50 percent of the site section’s organic traffic is going to three or four pages, your test is vulnerable to fluctuations in those pages’ performance that have nothing to do with the test. This may lead you to conclude that the change you are testing is having an effect when the result is actually being swayed by an irrelevant factor. By having the traffic well distributed across the site section, you ensure that these page-specific fluctuations even themselves out, and you can be more confident that any effect you measure is genuine.
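Both rules of thumb above can be checked before committing to a test. The sketch below assumes you have average daily organic sessions per page for the section; `section_is_testable` is a hypothetical helper, and the defaults (1,000 sessions per day, no more than 50 percent of traffic in the top four pages) simply encode the thresholds described in this section.

```python
def section_is_testable(daily_sessions_by_page, min_daily_sessions=1000,
                        top_n=4, max_top_share=0.5):
    """Apply the traffic-volume and traffic-concentration rules of thumb.

    daily_sessions_by_page maps each page URL to its average daily organic
    sessions. Returns (ok, total, top_share).
    """
    total = sum(daily_sessions_by_page.values())
    top = sorted(daily_sessions_by_page.values(), reverse=True)[:top_n]
    top_share = sum(top) / total if total else 0.0
    ok = total >= min_daily_sessions and top_share <= max_top_share
    return ok, total, top_share


# Hypothetical section: 200 long-tail pages plus two very popular ones.
pages = {f"/product/{i}": 6 for i in range(200)}
pages["/product/bestseller"] = 900
pages["/product/runner-up"] = 400
print(section_is_testable(pages))
```

Here the section clears the volume threshold (2,500 sessions per day) but fails the distribution check, because the top four pages take just over half of the traffic.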

3. Bucketing pages arbitrarily

In CRO tests, the best practice is to assign every user randomly to either the control or the variant group. Because of the large number of users that tends to be involved, this ensures that both groups are essentially identical.

In an SEO split test, we need to apply more nuance to this approach. For site sections with a very large number of pages, where the traffic is well distributed across them, the purely random approach may well lead to a fair bucketing, but most websites have some pages that get more traffic, and some that get less. As well as that, some pages may have different trends and spikes in traffic, especially if they serve a particular seasonal purpose.

In order to ensure that the control and variant groups of pages are statistically similar, we create them in such a way that they have:

- Similar total traffic levels

- Similar distributions of traffic between pages within them

- Similar trends in traffic over time

- Similarity in a range of other statistical measures
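One way to approximate the first three criteria is sketched below, under our own assumptions rather than as the ODN’s bucketing algorithm: rank pages by traffic, split each consecutive pair between the two groups at random (which keeps totals and distributions similar), then repeat with many random seeds and keep the split whose daily traffic series match most closely (which targets similar trends). The helper names are hypothetical, and the fourth criterion, other statistical measures, is not covered here.

```python
import random


def stratified_split(page_traffic, seed=0):
    """Split pages into two groups with similar traffic levels and spread.

    page_traffic maps page URL -> list of daily organic sessions (all the same
    length). Pages are ranked by total traffic and taken in consecutive pairs;
    within each pair, a coin flip decides which page goes to which group.
    With an odd number of pages, the last page goes to control.
    """
    rng = random.Random(seed)
    ranked = sorted(page_traffic, key=lambda p: sum(page_traffic[p]), reverse=True)
    control, variant = [], []
    for i in range(0, len(ranked), 2):
        pair = ranked[i:i + 2]
        rng.shuffle(pair)
        control.append(pair[0])
        if len(pair) > 1:
            variant.append(pair[1])
    return control, variant


def daily_totals(pages, page_traffic):
    """Sum the daily sessions of a group of pages."""
    days = len(next(iter(page_traffic.values())))
    return [sum(page_traffic[p][d] for p in pages) for d in range(days)]


def best_split(page_traffic, candidates=200):
    """Try several stratified splits; keep the one whose daily series match best."""
    best, best_score = None, float("inf")
    for seed in range(candidates):
        control, variant = stratified_split(page_traffic, seed)
        c = daily_totals(control, page_traffic)
        v = daily_totals(variant, page_traffic)
        # Squared daily differences penalise mismatched levels and trends.
        score = sum((a - b) ** 2 for a, b in zip(c, v))
        if score < best_score:
            best, best_score = (control, variant), score
    return best


# Hypothetical section: 40 pages, 28 days of sessions each.
gen = random.Random(42)
traffic = {f"/product/{i}": [gen.randint(5, 50) * (1 + i % 3) for _ in range(28)]
           for i in range(40)}
control, variant = best_split(traffic)
print(sum(daily_totals(control, traffic)), sum(daily_totals(variant, traffic)))
```

Pairing by traffic rank keeps one very popular page from landing in the same bucket as the next most popular one, which is exactly the failure mode purely random assignment risks on small or top-heavy sections.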

4. Running SEO split tests using JavaScript

For a lot of websites, it’s very hard to make changes, and harder still to split test them. A workaround that a lot of sites use (and that I have recommended in the past) is to deploy changes using a JavaScript-based tool such as Google Tag Manager.

Aside from the fact that we’ve seen pages that rely on JavaScript perform worse overall, another issue with this is that Google doesn’t consistently pick up changes that are implemented through JavaScript. There are two primary reasons for this:

- Crawling, indexing, and rendering happen in multiple phases: once Googlebot has discovered a page, it first indexes the content within the raw HTML, and there is often a delay before any content or changes that rely on JavaScript are considered.

- Even when Googlebot has rendered the JavaScript version of the page, it has a cut-off of five seconds after which it will stop processing any JavaScript. A lot of JavaScript changes to web pages, especially those that rely on third-party tools and plugins, take longer than five seconds, which means that Google has stopped paying attention before the changes have had a chance to take effect.

This can lead to inconsistency within tests. For example, if you are changing the format of your title tags using a JavaScript plugin, it may be that only a small number of your variant pages have that change picked up by Google. This means that whatever change you think you’re testing doesn’t have a chance of demonstrating a significant effect.
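A practical safeguard, offered here as our own suggestion rather than part of the original advice, is to confirm that a variant change is already present in the raw HTML the server returns, since that is what Googlebot indexes in its first phase. The sketch below extracts the `<title>` from an HTML string using Python’s standard `html.parser` module; the helper names are hypothetical, and fetching the HTML (for example with `urllib.request.urlopen`) is left out so the example runs offline.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collects the text of the first <title> element in a document."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def raw_html_title(html):
    """Return the <title> text as served, before any JavaScript runs."""
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return parser.title.strip()


def change_in_raw_html(html, expected_title):
    """True if the variant title is already in the server-rendered HTML."""
    return raw_html_title(html) == expected_title


# Hypothetical server response for a variant page.
server_html = "<html><head><title>Blue Widgets | Example Shop</title></head></html>"
print(change_in_raw_html(server_html, "Buy Blue Widgets Online | Example Shop"))
```

If the new title shows up in a browser but this check fails against the server response, the change is being applied client-side, and Google may not pick it up consistently across the variant pages.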

5. Doing pre/post tests instead of A/B tests

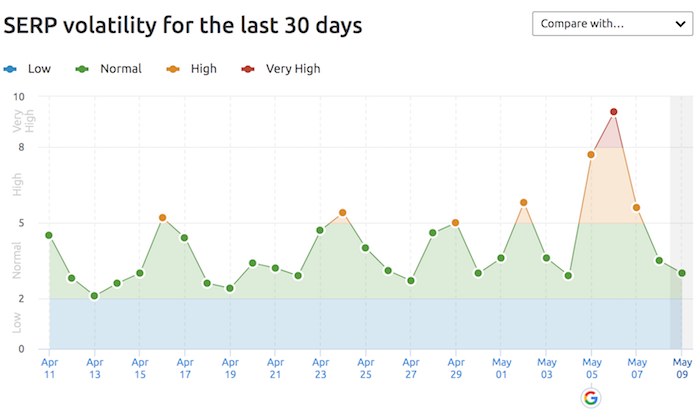

When people talk colloquially about SEO testing, often what they mean is making a change to an individual page (or across an entire site) and seeing whether their traffic or rankings improve. This is not a split test. If you’re just making a change and seeing what happens, your analysis is vulnerable to any external factors, including:

- Seasonal variations

- Algorithm updates

- Competitor activity

- Your site gaining or losing backlinks

- Any other changes you make to your site during this time

The only way to really know if a change has an effect is to run a proper split test — this is the reason we created the ODN in the first place. In order to account for the above external factors, it’s essential to use a control group of pages from which you can model the expected performance of the pages you’re changing, and know for sure that your change is what’s having an effect.
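To see why the control group matters, compare a naive pre/post estimate with one adjusted by the control pages. In the hypothetical weekly numbers below (made up purely for illustration), a seasonal lift of 20 percent hits every page in the section; the pre/post calculation attributes all of it to the change, while a simple ratio-of-ratios estimate (a multiplicative difference-in-differences, our choice for this sketch and not necessarily the ODN’s model) keeps only the growth the control pages did not also see. Both function names are hypothetical.

```python
def pre_post_uplift(pre_variant, post_variant):
    """Naive estimate: growth of the changed pages, ignoring everything else."""
    return post_variant / pre_variant - 1.0


def controlled_uplift(pre_control, post_control, pre_variant, post_variant):
    """Growth of the variant pages beyond the growth seen on the control pages."""
    variant_growth = post_variant / pre_variant
    control_growth = post_control / pre_control
    return variant_growth / control_growth - 1.0


# Hypothetical weekly sessions: seasonality lifts both groups by 20%,
# and the change adds 5% on top of that for the variant pages.
pre_control, post_control = 7000, 8400
pre_variant, post_variant = 7000, 8820

print(f"pre/post:   {pre_post_uplift(pre_variant, post_variant):+.1%}")
print(f"controlled: {controlled_uplift(pre_control, post_control, pre_variant, post_variant):+.1%}")
```

With these numbers the pre/post approach reports about 26 percent, while the controlled estimate recovers the 5 percent that the change itself contributed.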

And now, over to you! I’d love to hear what you think — what experiences have you had with split testing? And what have you learned? Tell me in the comments below!


